Use of machines to grade essays stirs debate

Updated: 2013-04-14 08:07

By John Markoff (The New York Times)


EdX, the massive open online course initiative in Massachusetts, will let institutions use its automated software to grade essays at no cost. Gretchen Ertl for The New York Times

Imagine taking a college exam, and, instead of getting a grade from a professor a few weeks later, clicking the "send" button when you are done and receiving a grade back instantly, your essay scored by a software program.

Now imagine that the system would immediately let you rewrite the test to try to improve your grade.

EdX, the nonprofit enterprise founded by Harvard University and the Massachusetts Institute of Technology to offer courses on the Internet, has introduced such a system and will make its automated software available free on the Web to any institution that wants to use it. The software uses artificial intelligence to grade essays and short written answers, freeing professors for other tasks.

Although automated grading systems for multiple-choice and true-false tests are now widespread, the use of artificial intelligence technology to grade essay answers has not yet received widespread endorsement by educators and has many critics.

Anant Agarwal, the president of EdX, predicted that the instant-grading software would be a useful pedagogical tool, enabling students to take tests and write essays over and over and improve the quality of their answers.

"There is a huge value in learning with instant feedback," Dr. Agarwal said. "Students are telling us they learn much better with instant feedback."

But skeptics say the automated system is no match for live teachers. One longtime critic, Les Perelman, has drawn attention several times for putting together nonsense essays that have fooled software grading programs into giving high marks.

"My first and greatest objection to the research is that they did not have any valid statistical test comparing the software directly to human graders," said Mr. Perelman, a retired director of writing and a current researcher at M.I.T.

He is among a group of educators circulating a petition opposing automated assessment software. The group - Professionals Against Machine Scoring of Student Essays in High-Stakes Assessment - has collected nearly 2,000 signatures.

"Let's face the realities of automatic essay scoring," the group's statement reads in part. "Computers cannot 'read.' They cannot measure the essentials of effective written communication: accuracy, reasoning, adequacy of evidence, good sense, ethical stance, convincing argument, meaningful organization, clarity, and veracity, among others."

The EdX assessment tool requires human teachers, or graders, to first grade 100 essays. The system then uses machine-learning techniques to train itself to be able to grade any number of essays or answers almost instantaneously.

The software will assign a grade depending on the scoring system created by the teacher, and it will provide general feedback, like telling a student whether an answer was on topic.
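The train-then-grade workflow described above can be illustrated with a toy sketch. It is not EdX's software, whose internals the article does not describe. It swaps in a deliberately simple technique (word counts compared by cosine similarity against the pooled vocabulary of each human-assigned grade), and every function name, the sample essays and the 0.2 "on topic" threshold are assumptions made for illustration.

```python
import math
from collections import Counter


def features(text):
    """Turn an essay into lowercase word counts (a crude stand-in for real features)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors; 0.0 if either is empty."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def train(graded_essays):
    """Pool the word counts of human-graded essays by grade.

    graded_essays is a list of (essay_text, grade) pairs scored by a teacher.
    """
    model = {}
    for text, grade in graded_essays:
        model.setdefault(grade, Counter()).update(features(text))
    return model


def grade_essay(model, essay, on_topic_threshold=0.2):
    """Return (closest grade, on_topic flag) for a new essay.

    The threshold is an arbitrary illustrative value, not a tuned one.
    """
    vec = features(essay)
    scores = {grade: cosine(vec, pooled) for grade, pooled in model.items()}
    best = max(scores, key=scores.get)
    return best, scores[best] >= on_topic_threshold


# Hypothetical teacher-graded sample (a real system would need far more essays).
graded = [
    ("photosynthesis converts light energy into chemical energy in plants", "A"),
    ("plants use sunlight water and carbon dioxide to make glucose", "A"),
    ("plants are green and grow", "C"),
    ("i like plants", "C"),
]
model = train(graded)
result = grade_essay(model, "plants convert light energy into chemical energy")
```

A scorer of this kind rewards vocabulary overlap rather than reasoning, which is the sort of weakness critics of automated grading point to: an essay stuffed with the right words would score well whether or not it made sense.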

Dr. Agarwal said he believed that the software was nearing the capability of human grading. "This is machine learning and there is a long way to go, but it's good enough and the upside is huge," he said. "We found that the quality of the grading is similar to the variation you find from instructor to instructor."

EdX is not the first to use automated assessment technology, which dates to early mainframe computers in the 1960s. Several companies offer commercial programs to grade written test answers. In some cases the software is used as a "second reader," to check the reliability of the human graders.

Stanford University in California recently announced that it would work with EdX to develop a joint educational system that will incorporate the automated assessment technology.

Two start-ups, Coursera and Udacity, recently founded by Stanford faculty members to create "massive open online courses," or MOOCs, are also committed to automated assessment systems.

"It allows students to get immediate feedback on their work, so that learning turns into a game, with students naturally gravitating toward resubmitting the work until they get it right," said Daphne Koller, a founder of Coursera.

Last year the Hewlett Foundation sponsored two $100,000 prizes aimed at improving software that grades essays and short answers. Mark D. Shermis, a professor at the University of Akron in Ohio, supervised the Hewlett Foundation's contest. In his view, the technology - though imperfect - has a place in educational settings.

With increasingly large classes, it is impossible for most teachers to give students meaningful feedback on writing assignments, he said. Plus, he noted, critics of the technology have tended to come from America's best universities.

"Often they come from very prestigious institutions where, in fact, they do a much better job of providing feedback than a machine ever could," Dr. Shermis said. "There seems to be a lack of appreciation of what is actually going on in the real world."

The New York Times

All safe as plane misses Bali runway, lands in sea

All safe as plane misses Bali runway, lands in sea

Music that shines

Music that shines

US eyes 'strong, normal, special' ties with China

US eyes 'strong, normal, special' ties with China

China, US consider roadmap to boost ties

China, US consider roadmap to boost ties

Speeches and cream on foreign tour

Speeches and cream on foreign tour

Shops in scenic town back in business

Shops in scenic town back in business

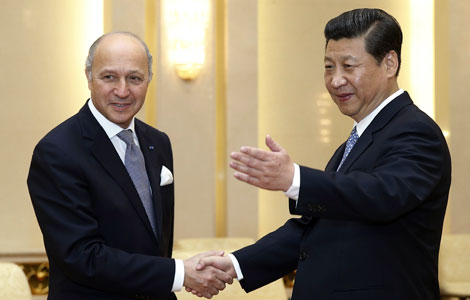

Xi urges close relations with France

Xi urges close relations with France

Bird flu takes toll on poultry industry

Bird flu takes toll on poultry industry

Most Viewed

Editor's Picks

|

|

|

|

|

|

Today's Top News

China calls for regional peace and stability

Innovation key to China's economy

Abbas accepts PM's resignation: official

Beijing confirms first H7N9 infection

US eyes 'strong, normal, special' ties with China

All safe as plane lands in Bali sea

China, US to hold 5th S&ED in July

Building collapse kills 2 in Guizhou

US Weekly

|

|